Wednesday, January 26, 2011

my Social Network

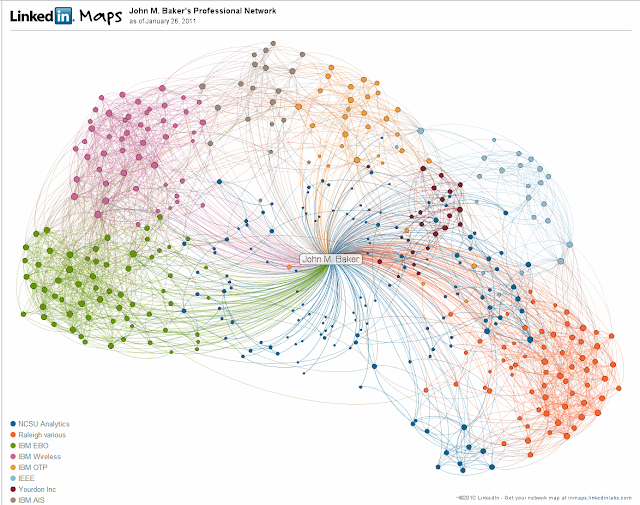

I am a sucker for visualization of data through graphs.

For example, IBM has Many Eyes, which lets you view data in many different ways, and it has a gallery of what others have done.

But I just got invited to build a graph of my LinkedIn social network.

This is so cool.

It derives clusters of associated people (in my case, eight) and then asks me to come up with a semantic label for each cluster. As you can see from my graph, I have a lot of associations with people I used to work with at IBM in different business units. Some of these people are still active in IBM and many are working elsewhere. I also have a cluster for my xYourdon colleagues and one for my current IEEE work. The oddball is the "Raleigh various" category, based on my belonging to several associations where networking is a primary purpose of the association, so everyone is connected to everyone else.

Patterns of Success - Kevin Jackson

I worked with Kevin Jackson at IBM as part of our Emerging Business Organization for Wireless. While there, he was the Worldwide Sales Executive for an IBM software product that allowed mobile devices to connect to enterprise servers. My team often used this product when we built a customer solution.

Today Kevin is a Director of Cloud Computing Services at NJVC.

John - Kevin, before we get into the Patterns of Success, could you tell me a bit about NJVC? To start with, I could not find the company name spelled out on the website. What does NJVC stand for?

Kevin - Well NJVC is not an acronym for any official company name. The company had a unique beginning about ten years ago as a joint venture between two native Alaskan firms to manage the IT infrastructure of the National Geospatial-

John - And what are you doing as the Director of Cloud Computing Services?

Kevin - The NGA collects, stores, and distributes massive quantities of image data and associated information. They are always looking for innovative means to accomplish this mission. I joined NJVC to help identify advanced technology to accomplish this for NGA and other customers. For the first six months at NJVC I worked as a mission watch officer in the network operations center. This taught me the details of the agency's infrastructure. During that time I looked at how Cloud Computing could be applied in support of the NGA.

John - And you write the blog Cloud Musings. In the December post you were speaking of the initiative by the Federal CIO (Vivek Kundra) to reduce IT costs by requiring all federal agencies and departments to move at least three applications to the cloud over the next 18 months. Do you think this is too aggressive?

Kevin – Aggressive? Yes, but this type of push is sorely needed. In fact Vivek Kundra started looking at Cloud Computing as a means of increasing efficiency and reducing cost when he first came in two years ago. He worked closely with the Federal CIO Council which launched two initiatives, the Federal Data Center Consolidation Initiative, and the Federal Cloud Computing Initiative.

John - So is your primary customer, NGA, going to participate in these initiatives?

Kevin - Well, all the federal agencies are required as part of the budget process to examine cost savings from one or both of these initiatives. NGA as a combat support agency of the DOD is required to meet that mandate. If you look at the DOD they have been leaders in the use of Cloud Computing.

John - When we talk about Federal Cloud Computing, are we not talking about two distinct clouds: a DOD cloud with its own security, and all the other federal departments in a separate cloud?

Kevin - Every organization has its own network with access and authentication. But in order to work, the networks are connected with each other and the rest of the internet. Instead of thinking of different clouds based on government department, think of the content and functionality being provided as requiring different cloud infrastructures. For example, the NGA provides top secret imaging to the DOD. This is held on a very secure network. However, if you go to Amazon.com you will also find public charts and maps provided by NGA.

John - As the Director of Cloud Computing Services at NJVC what kind of work do you do for your customers?

Kevin - We will consult with a customer to help them decide on how and when to move to a cloud. We help them understand how to potentially collaborate with other government agencies and clouds. For example, could they take advantage of the DISA RACE? If the decision is to move forward, we can implement and maintain the cloud infrastructure. At our core, NJVC is an Operations and Sustainment business. I think that the data center of the future will involve building and operating infrastructure that you own as well as leveraging cloud-based services to accomplish the mission.

John - Interesting. I recently had an interview with Toufic Boubez where he talks about how his new company (MetaFor) is developing tools to allow applications management in a "Data Center" that can be many servers located in your facilities or located in a cloud.

Kevin - Absolutely. And the brokering of services is key to making that work.

John - Let's turn to the Patterns of Success. In your area of expertise, cloud computing for the federal government, what have you seen work well in the adoption of cloud computing?

Kevin - Well, first off, cloud computing is still in its early days, so I would have to say that the jury is still out on whether it will be a successful strategy for the federal government. For some business models it is wildly successful, but for other business models it will fail. The trick for the customer is to understand what their business model is and see if it is a good fit for a cloud implementation.

John - Give me an example of a successful matching of a business model to a specific cloud implementation.

Kevin - The US Army leveraged an instance of SalesForce.com to support its recruiting program. SalesForce is a customer relationship management model and the Army treated each of its candidates as a customer. They could have campaigns, call logs, candidate information, and so on. The beauty for the US Army was that they did not have to build a custom recruiting application costing millions of dollars and taking many months or years to implement. The SalesForce.com instance was immediately available for use, it was global, and it could scale to the numbers of recruiters and candidates out there.

John - It seems that one of the cornerstone arguments around cloud computing is the significantly improved ROI.

Kevin - You were asking about what happens when the business model does not match the cloud implementation. Look at the example of the City of Los Angeles. They signed a contract with Google to provide Gmail for all city employees. After the contract was signed, the LAPD raised a concern. They had a policy that anyone with access to their email needed to have a background check. Could Google prove that anyone on the Google cloud had such a background check? It turned out to be a bad fit between the business model of the consumer and the business model of the provider.

John - Any other Patterns of Success?

Kevin - It's important that you have clear, measurable metrics, and that during implementation and operations of a cloud you monitor those metrics.

John - It seems to me that one of the classic metrics for cloud is a total cost of ownership reduction in moving from client/server custom applications to cloud based services.

Kevin - Yes. However it is important not to transition to cloud computing solely on a cost based metric.

John - OK. In addition to cost per user per month, what other metrics should be used?

Kevin - Agility. The ability to provide IT Resources to customers when they need them. Because cloud computing had its roots in the commercial sector, employees would come to work and ask why they could not get a Google search within their office application just like they had at home. They demanded the agility they were getting at home.

John - There are several flavors of cloud computing. At one level we have vendors providing applications or application portfolios (Google, Microsoft); at another level we have infrastructure providers like Amazon Web Services, where I can move the custom application that I developed to run on the cloud provided by Amazon. Do you think that in the future a custom application will be assembled from components provided by vendor(s), along with those that I develop to a standard API, and then run in an environment offered by other vendor(s)? For example, would an IRS tax application of the future run on a federal cloud and combine specific tax application components with publicly available search engines (e.g. Google or Microsoft)?

Kevin - While there are platforms like the Google App platform or the SalesForce platform, I think that because the federal government is so large and complex it will have its own platform that new applications will use. Perhaps they will decide not to re-invent a search engine and will procure and certify Google search to be part of this federal platform. But there will be a lot of common components that all government entities will use, and those wishing to access the government systems will use.

John - What have been some of the Failures to Launch?

Kevin - A large federal department tried to morph existing standing contracts with vendors in order to support cloud computing and this failed. The terms of the existing contract restricted the government's ability to implement the cloud business model. Each application project had to be developed independently from all the others and could not take advantage of a multi-tenant cloud environment.

John - Any other common failures you have seen?

Kevin - Yes. I call it the cloud nirvana syndrome. Because cloud is new they figure that whatever they want to do, cloud will make it better.

John - The classic Gartner Hype Cycle (see below and notice where private cloud computing and cloud computing are)

Kevin - It is a lack of education usually.

John - Remember that Cloud Camp we were both at a couple years ago? I had run the session on Failure to Launch and it seemed at that time that all the failures came from basic software engineering mistakes. Not the exotic newness of cloud technology. Have you seen similar situations?

Kevin - Lack of Project Management seems to be the big problem I have seen. One of the overarching themes I have seen is that groups that fail tend to focus on the technology and groups that succeed focus on the business model.

John - When I talk with CIOs and ask about cloud computing one of the most common issues raised is control of sensitive information. They just do not trust cloud vendors yet to hold their confidential operational information. Even if the cloud vendor can show the use of similar security programs that are used in the CIO's data center.

Kevin - Yes. In my opinion the technology of the security is already adequate. It is a business model that gives more control of the data to people that are not employees. That is the issue.

John - Finally, what do you think the NEXT BIG THING will be for cloud computing in the federal government three years from now?

Kevin - I think the semantic web will allow web services to adapt themselves to the context of a specific user interaction with the web. These web services will self-configure to adjust to what your particular needs and requirements are. That enabling technology will allow dynamic virtual clouds to self organize. Instead of getting services from a cloud provider, they will come from a much broader ecosystem.

John - So how would this affect the day-to-day job of an imaging analyst at the NGA?

Kevin - The time and cost to get a particular task accomplished would drop significantly. We have already seen how our current internet has lowered the cost of finding and using information. With a semantic web, this will be even more significant. So it would be more like the analyst explaining the problem that needs to be solved and the cloud creating a way to get that specific answer on-the-fly.

John - Thanks for sharing your insights into this exciting emerging technology.

Wednesday, January 19, 2011

Patterns of Success - Peter Hill

Peter Hill is a recent acquaintance whom I met through Capers Jones. He lives in Melbourne, Australia, and runs an organization called the International Software Benchmarking Standards Group (more about that in the interview). I found him to have a very deep insight into the fundamental reasons why software projects are successful.

Peter - The name ISBSG is a bit unfortunate now because our focus is less on benchmarking standards and more on estimation and project management. In the early 1990s a group of IT professionals came together because they felt that the industry would benefit from the collection of data on software development and analysis of that data to see if there were practices that could be publicized to help the industry improve its performance.

They came up with a questionnaire to collect that data. Then there were discussions with people from other countries who had heard about the project. They wanted to use the questionnaire to collect data in their country and, to cut a long story short, they decided to create an international organization and a common repository. Over the years we have grown the number of member countries participating until we now have twelve. We have a small permanent staff who collects data, analyzes it, and publishes papers and books including our third edition of Practical Software Project Estimation that has just been published by McGraw-Hill. We also make our data available at a reasonable cost. So in summary, the ISBSG is a not-for-profit organisation that exists simply to try to help the IT industry improve its performance.

John - How large is the repository? I don't know how you would measure the size. Number of projects?

Peter - We have two repositories. One, which is relatively recent, focuses on the metrics of applications that are under maintenance and support. That currently has 500 applications. However, for this interview we will focus on the other repository, which comprises Development and Enhancement projects. That repository has 5,600 projects that come from all over the world and not only from the member countries.

John - One of the things I am really curious about is that since you are collecting data from all these countries, if you were to normalize all factors that influence project performance except the country where the project was done, do you see any differences? Or is a software project the same all over the world?

Peter - We don't report our findings by country. We want to protect the integrity of the data and want to prevent a country sending data to the ISBSG that has been doctored to make it appear that they have high productivity...that Outer Mongolia has the best software developers in the world.

John - Oh I see. So outsource your projects to Outer Mongolia where they will be done better?

Peter - I can answer your question in one way... we have done a study to compare onshore vs offshore projects. We have published this as a special report that is available from the web site: ‘Outsourcing, Offshoring, In-house – how do they compare?’ If you look at what we call the project development rate, the number of hours to deliver a Function Point, then offshore is about 10% more productive, and speed of delivery is much better for offshore vs onshore. Unfortunately, the offshore projects deliver with a much higher defect rate, almost three times higher.

John - I can see offshore projects being divided into two categories. The first is where the sourcing company has a division offshore and the two have worked together on several projects. The second is a sourcing company that enters into a contract with another firm like Tata or Infosys to perform the project. I would imagine that the performance of the first type of project would be better than the second.

Peter - You may be right, but in our report we haven’t differentiated between the two types of offshore development: in-house and outsourced. However, I do think your idea has some validity because one of the significant drivers of project performance is communication. You would expect communication to be better within a single organisation even if the development team is offshore. The ISBSG does collect what we call ‘soft factors’, and for some offshore projects communication was listed as a problem.

The general impact of "outsourcing" a project (not offshore), even if it is one division of a company doing work for another, is a 20% decrease in productivity rate, and the defect densities are worse by about 50%, with speed of delivery being similar.

John - I used to work at IBM during the period when the company was moving to build a significant offshore capability and offer clients projects with mixed onshore/offshore delivery. We struggled with early deliveries and adapted our practices, infrastructure, and tools to get better. I suspect that in your repository if you captured the level of experience a team has had with doing offshore work, both in sourcing and delivering, you would find that the more experienced teams have better results.

Peter - I think that is a sound assumption. In general we have found that projects benefit from an experienced and stable team, an experienced project manager, known technology, and stable requirements. These all lead to successful outcomes. Other contributing factors include having an educated customer, single-site delivery, and having a smaller number of deliverables (including documents).

Something to remember about the statistics I am giving you is that the ISBSG does not keep data on failed projects. We are given data on projects that have been delivered into production. So our data is biased. It is also biased because it is not a random survey of all projects but is data volunteered by organizations that probably have a bias towards getting better at Software Engineering. The mere fact that the organization is aware of the ISBSG is an indicator of its maturity. So our data probably represents the upper end of the industry.

John - Does this give users of the repository false expectations on what might be possible for their organization?

Peter - We try to offset that bias by providing a ‘how to use’ document with all the material we send out. We have what we call a reality check to make sure a consumer of the data is using it wisely.

John - So, let's move on to some Patterns of Success. We have already touched on many factors that lead to successful outcomes, but tell me what you think the top few contributing factors are for a project to be successful.

Peter - The things that stand out are the size of the development team and the project complexity. The more complex a project the higher the risk of not delivering, and the larger the team size the lower the productivity. Another significant contribution to success is where an organization is pursuing process improvement via CMMI. Even organizations that have only a CMMI level two demonstrate higher productivity. You might think "Hold on, what about all the bureaucratic overhead to comply with CMMI?" Turns out these organizations have only slightly slower speed of delivery, they have somewhat higher productivity, and their defect rates are much better.

John - Why do you think that complying with a CMMI model makes a difference?

Peter - I think it reflects on something you said earlier... level of maturity. These people have looked at what they were doing and have decided that they could get better and are making an investment in doing so. This focus of the organization on process improvement instills a work attitude that influences day to day behaviors.

John - If all these factors are in place for a given project, will that project have an outcome that is an order of magnitude better than average? What does the distribution curve look like as we keep piling on improvements?

Peter - I have not mentioned yet the programming language which is a major influence on productivity. For all these improvements there is an initial negative impact at the time of introduction. For example if a company takes on a modern programming language the productivity is terrible and then over two years of use the productivity improves until it is twice the old baseline. So if it used to take 18 hours per function point it is now 9. These are big improvements.

Here is one example we have seen from our analysis... Putting aside the size of the project, then there is a correlation between team size and productivity. A team of 1-4 developers achieved a median productivity of 6 hours per function point. Teams of 5-8 achieve 9.5 hours per function point. More than 9 people in the team results in 13 hours per function point. So if you can split things up so that they are delivered by small teams then the overall productivity is greatly improved.
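
As a rough illustration of the team-size figures Peter quotes, the sketch below turns the three median rates into a back-of-the-envelope effort estimate. The function names and the 200 function point example are hypothetical, and because the quoted bands leave a team of exactly nine unassigned, placing it in the largest band is an assumption. These are medians from the ISBSG data as reported in the interview, not a prediction for any particular project.

```python
# Median hours per function point by team size, as quoted in the interview.
# Each entry is (largest team size in the band, median hours per function point).
MEDIAN_HOURS_PER_FP = [
    (4, 6.0),   # teams of 1-4 developers
    (8, 9.5),   # teams of 5-8 developers
]
# Assumption: teams of 9 or more all fall into the largest band.
LARGE_TEAM_HOURS_PER_FP = 13.0


def median_hours_per_fp(team_size: int) -> float:
    """Return the quoted median delivery rate for a team of the given size."""
    if team_size < 1:
        raise ValueError("team_size must be at least 1")
    for upper_bound, rate in MEDIAN_HOURS_PER_FP:
        if team_size <= upper_bound:
            return rate
    return LARGE_TEAM_HOURS_PER_FP


def estimated_effort_hours(function_points: float, team_size: int) -> float:
    """Estimate total effort as size in function points times the median rate."""
    return function_points * median_hours_per_fp(team_size)


if __name__ == "__main__":
    # Hypothetical 200 function point project delivered by different team sizes.
    for size in (3, 6, 12):
        print(size, estimated_effort_hours(200, size))
```

On these medians, the same 200 function points cost roughly twice the effort with a team of twelve as with a team of three, which is the point Peter makes about splitting work across small teams.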

John - And that assumes that each developer has individual productivity equal to everyone else. And we know from historical data that there is a fourfold difference from worst to best individual productivity. As a hiring manager I was always looking for top-talent individuals to bring into the company and create small teams with superstars. However, to use a sports analogy, this could sometimes be like an all-star game. A team brought together with superstars might underperform another team that has a better fit of individuals and more experience working together. Does this kind of phenomenon ever show up in the reports in your repository?

Peter - We can't collect the experience levels of the individuals that make up the teams reported to our repository. We do collect some experience data on the project manager.

John - In the second topic of the interview, Failures to Launch, you have not collected data in the repository on failures. I think it would be a fascinating addition... to be able to submit an anonymous report on a failed project and the root causes for the failure.

Peter - That is an area where a researcher needs to ask probing questions. Capers Jones collects data in that manner and he also participates as an expert witness in litigation over failed projects. He would be a better source than the ISBSG. Can I switch the conversation around and talk about some more things that work really well?

John - Sure

Peter - We have done a lot of research on what works and does not work (a report called ‘Techniques and Tools – their impact on projects’). We have collected a lot of projects where iterative development is used; Rapid Application Development and Agile Development are examples. Agile projects have a 30% improvement in productivity, and speed of delivery is improved by about 30% as well. We don't have enough data yet to comment on quality improvements.

John - I have reviewed one of the ISBSG questionnaires and among a lot of questions you ask if the project is using a particular method such as Agile or TSP or RAD etc. As these reports flow into the repository over time have you seen any trends forming? An increase or decrease in use of a specific method?

Peter - The increase in people using Agile development is the most significant trend we have seen. We also saw a dramatic increase in the use of Java and C++ several years ago. There is always a problem with perceived technical silver bullets. As I said earlier, our data underlines that it takes time for a project team to become competent with a new method or new technology. So when there is a sudden general adoption of something new, we see a corresponding decrease in project performance until the ‘silver bullet’ is absorbed.

One of the very interesting findings we have at the ISBSG is that over the last fifteen years of collecting data we have seen no improvements in average productivity across the industry. All the improvements I mentioned earlier have not been adopted universally by the industry and are offset by projects continuing to develop in a chaotic manner. So there has been no overall improvement in the way we develop applications. I got the ISBSG Analyst to look at the average productivity over time. We have grouped the data into five-year periods. This is the result (for all software development – New and Enhancements) shown as the median number of hours taken to produce a function point of software:

| Period | Median PDR (hours per FP) |
| --- | --- |
| 1989-1993 | 7.6 |
| 1994-1998 | 6.7 |
| 1999-2003 | 11.0 |
| 2004-2008 | 12.6 |
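
To make the trend in these medians easier to read, here is a minimal sketch that computes the percentage change in median PDR from one period to the next. The helper names are hypothetical; the four values are the ones quoted above. Keep in mind that PDR is hours per function point, so a positive change means more hours per function point, that is, lower productivity.

```python
# Median PDR (hours per function point) by five-year period, as quoted above.
MEDIAN_PDR = {
    "1989-1993": 7.6,
    "1994-1998": 6.7,
    "1999-2003": 11.0,
    "2004-2008": 12.6,
}


def percent_change(old: float, new: float) -> float:
    """Percentage change from old to new; positive means more hours per FP."""
    return (new - old) / old * 100.0


def period_over_period(pdr: dict) -> list:
    """Return (earlier period, later period, percent change) for adjacent periods."""
    periods = list(pdr)  # dicts preserve insertion order, so this is chronological
    return [
        (earlier, later, percent_change(pdr[earlier], pdr[later]))
        for earlier, later in zip(periods, periods[1:])
    ]


if __name__ == "__main__":
    for earlier, later, change in period_over_period(MEDIAN_PDR):
        print(f"{earlier} -> {later}: {change:+.1f}%")
    first, last = MEDIAN_PDR["1989-1993"], MEDIAN_PDR["2004-2008"]
    print(f"first to last period: {percent_change(first, last):+.1f}%")
```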

John - Wow. That is a significant finding. Has quality improved or is it flat as well?

Peter – A recent analysis we did for the report: ‘Software Defect Density’ shows that it is flat. The ISBSG data shows no evidence of any improvement in defect rates over the fifteen year period. Certain projects using particular methods or tools can show dramatic improvements, but they are offset by projects with poorer quality results such that the overall median defect rate has not improved.

So much for silver bullets!

John - I guess that satisfies my need for a "Failure to Launch"... in this case the whole industry has been stagnant in improving project performance over the last decade.

John - So the last part of the interview is the NEXT BIG THING. And for you this will be interesting because I wonder if you see in the latest data submissions that ISBSG is getting any indicators of what might be an important trend?

Peter - Oh, that is a difficult one. What we are seeing is that the really successful projects tend to be following the fundamentals: they have small, stable teams, with an experienced project manager, working with languages and infrastructure that they are familiar with. The projects that follow these patterns always outperform any project trying something new. This may disappoint you, but all the work we have done looking for real silver bullets tells us that there are none.

John - Thank you very much for your time and your insights.

They came up with a questionnaire to collect that data. Then there were discussions with people from other countries who had heard about the project. They wanted to use the questionnaire to collect data in their country and, to cut a long story short, they decided to create an international organization and a common repository. Over the years we have grown the number of member countries participating until we now have twelve. We have a small permanent staff who collects data, analyzes it, and publishes papers and books including our third edition of Practical Software Project Estimation that has just been published by McGraw-Hill. We also make our data available at a reasonable cost. So in summary, the ISBSG is a not-for-profit organisation that exists simply to try to help the IT industry improve its performance.

John - How large is the repository? I don't know how you would measure the size. Number of projects?

John - One of the things I am really curious about is that since you are collecting data from all these countries, if you were to normalize all factors that influence project performance except the country where the project was done, do you see any differences? OR is a software project the same all over the world?

John - Oh I see. So outsource your projects to Outer Mongolia where they will be done better?

John - I can see offshore projects being divided into two categories, one where the sourcing company has a division offshore and has worked together on several projects. The second is a sourcing company that enters into a contract with another firm like TaTa or Infosys to perform the project. I would imagine that the performance of the first type of project would be better than the second.

Peter - You may be right, but in our report we haven’t differentiated between the two types of offshore development: in-house and outsourced. However, I do think your idea has some validity because one of the significant drivers of project performance is communication. You would expect communication to be better within a single organisation even if the development team is offshore. The ISBSG does collect what we call ‘soft factors’, for some offshore projects communication was listed as a problem.

The general impact of "outsourcing" a project (not offshore), even if one division of a company doing work for another, is a 20% decrease in productivity rate and the defect densities are worse by about 50%, with speed of delivery being similar.

John - I used to work at IBM during the period when the company was moving to build a significant offshore capability and offer clients projects with mixed onshore/offshore delivery. We struggled with early deliveries and adapted our practices, infrastructure, and tools to get better. I suspect that in your repository if you captured the level of experience a team has had with doing offshore work, both in sourcing and delivering, you would find that the more experienced teams have better results.

Something to remember about the statistics I am giving you is that the ISBSG does not keep data on failed projects. We are given data on projects that have been delivered into production. So our data is biased. It is also biased because it is not a random survey of all projects but is data volunteered by organizations that probably have a bias towards getting better at Software Engineering. The mere fact that the organization is aware of the ISBSG is an indicator of its maturity. So our data probably represents the upper end of the industry.

John - Does this give users of the repository false expectations on what might be possible for their organization?

John - So, lets move on to some Patterns of Success. We have already touched on many factors that lead to successful outcomes but tell me what you think the top few contributing factors are for a project to be successful.

Peter - The things that stand out are the size of the development team and the project complexity. The more complex a project the higher the risk of not delivering, and the larger the team size the lower the productivity. Another significant contribution to success is where an organization is pursuing process improvement via CMMI. Even organizations that have only a CMMI level two demonstrate higher productivity. You might think "Hold on, what about all the bureaucratic overhead to comply with CMMI?" Turns out these organizations have only slightly slower speed of delivery, they have somewhat higher productivity, and their defect rates are much better.

John - Why do you think that complying with a CMMI model makes a difference?

Peter - I think it reflects on something you said earlier... level of maturity. These people have looked at what they were doing and have decided that they could get better and are making an investment in doing so. This focus of the organization on process improvement instills a work attitude that influences day to day behaviors.

John - If all these factors are in place for a given project, will that project have an outcome that is an order of magnitude better then average? What is the distribution curve look like as we keep piling on improvements?

Peter - I have not mentioned yet the programming language which is a major influence on productivity. For all these improvements there is an initial negative impact at the time of introduction. For example if a company takes on a modern programming language the productivity is terrible and then over two years of use the productivity improves until it is twice the old baseline. So if it used to take 18 hours per function point it is now 9. These are big improvements.

John - And that assumes that each developer has individual productivity equal to everyone else. And we know from historical data that there is a four fold difference from worst to best individual productivity. As a hiring manager we were always looking for the top talent individuals to bring into the company and create small teams with superstars. However to use a sports analogy, this could sometimes be like an all star game. A team brought together with superstars might under perform another team who has a better fit of the individuals and more experience working together. Does this kind of phenomena every show up in the reports in your repository?

Peter - We can't collect the experience levels of the individuals that make up the teams reported to our repository. We do collect some experience data on the project manager.

John - In the second topic of the interview, Failures to Launch, you have not collected data in the repository on failures. I think it would be a fascinating addition... to be able to submit an anonymous report on a failed project and the root causes for the failure.

Peter - Sure.

Peter - We have done a lot of research on what works and does not work (a report called ‘Techniques and Tools – their impact on projects’). We have collected a lot of projects where iterative development is used; Rapid Application Development and Agile Development are examples. Agile projects have a 30% improvement in productivity, and speed of delivery is improved by about 30% as well. We don't have enough data yet to comment on quality improvements.

Another method that seems to work very well is Joint Application Development. Productivity is 10% above average and speed of delivery is 20% above average.

Some things don't seem to make much difference. For example, Object Oriented Development does not seem to improve productivity or speed of delivery over the average.

I don't know if people are still using CASE tools but they had a positive impact on project performance, particularly with much lower defect rates. Given the early rush to CASE tools and the investment in learning to use them, I am not sure people got the dramatic improvements they had hoped for when the tools were so popular.

John - I have reviewed one of the ISBSG questionnaires and among a lot of questions you ask if the project is using a particular method such as Agile or TSP or RAD etc. As these reports flow into the repository over time have you seen any trends forming? An increase or decrease in use of a specific method?

Peter - One of the very interesting findings we have at the ISBSG is that over the last fifteen years of collecting data we have seen no improvements in average productivity across the industry. All the improvements I mentioned earlier have not been adopted universally by the industry and are offset by projects continuing to develop in a chaotic manner. So there has been no overall improvement in the way we develop applications. I got the ISBSG Analyst to look at the average productivity over time, grouped into five-year periods. This is the result (for all software development, New and Enhancements) shown as the median number of hours taken to produce a function point of software:

| Period | Median PDR (hours per FP) |
| --- | --- |
| 1989-1993 | 7.6 |
| 1994-1998 | 6.7 |
| 1999-2003 | 11.0 |
| 2004-2008 | 12.6 |
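To put the figures above in perspective, here is a minimal Python sketch that computes the percentage change in median PDR relative to the first period. The variable names and the choice of 1989-1993 as the baseline are assumptions made for illustration; they are not part of the ISBSG analysis. Keep in mind that PDR is hours per function point, so a higher number means lower productivity.

```python
# Median PDR (hours per function point) per five-year period, as quoted above.
median_pdr = {
    "1989-1993": 7.6,
    "1994-1998": 6.7,
    "1999-2003": 11.0,
    "2004-2008": 12.6,
}

# Assumption for illustration: use the earliest period as the baseline.
periods = list(median_pdr)
baseline = median_pdr[periods[0]]

# Percentage change in hours per FP relative to the baseline period.
changes = {
    period: round((hours - baseline) / baseline * 100, 1)
    for period, hours in median_pdr.items()
}

for period, change in changes.items():
    print(f"{period}: {median_pdr[period]:>5.1f} hours/FP ({change:+.1f}% vs. {periods[0]})")
```

On these numbers, the median effort per function point in 2004-2008 is roughly two thirds higher than in 1989-1993, after a modest dip in 1994-1998.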

John - Wow. That is a significant finding. Has quality improved or is it flat as well?

So much for silver bullets!

John - I guess that satisfies my need for a "Failure to Launch"... in this case the whole industry has been stagnant in improving project performance over the last decade.

Peter - Oh, that is a difficult one. What we are seeing is that the really successful projects tend to follow the fundamentals: they have small, stable teams, with an experienced project manager, working with languages and infrastructure that they are familiar with. The projects that follow these patterns always outperform any project trying something new. This may disappoint you but all the work we have done looking for real silver bullets tells us that there are none.

John - Thank you very much for your time and your insights.

Wednesday, January 12, 2011

Patterns of Success - Dean Douglas

I worked for Dean Douglas for a few years while I was at IBM. He was the General Manager of our Emerging Business Opportunity (EBO) business unit for Wireless Computing. The EBO was based on a business model promoted in the book Alchemy of Growth. The idea was that in a large company like IBM, one needed a focused business unit with a mission to provide emerging technology solutions to customers by working with and through the traditional business units in IBM. So Dean had a worldwide organization with a combination of sales, and services delivery capacity. My group was the services delivery piece.

Today, Dean is the CEO of the Westcon Group.

He is the first CEO I have asked to give his views on what are patterns of success.

John - Dean, thanks for taking the time to speak with me today. Before we get into the core interview questions I did have another question. I saw on the Westcon website that you had been named one of the top 25 Channel Mavericks of 2010. What did you do to earn that recognition?

Dean - I've thought about that too and can't point to just one thing we may have done. Obviously, I am very flattered by the award. I do think it is more about the way we have approached the marketplace. We have not approached the marketplace in a traditional manner or the same as our competition. We looked at where the opportunities were in the marketplace and took an aggressive view of these opportunities but not a conventional view. Take cloud computing, for example. Many distributors, resellers, and channel partners see cloud computing as a threat to their traditional data center solution. Westcon sees it as a positive opportunity. If an enterprise depends more and more on cloud services then they are going to need more robust security and more robust network infrastructure. They will need hardware to allow near-local response times for those applications that cannot afford the latency of the cloud. So we see cloud computing as a way to increase our business-as-usual in addition to the opportunities it presents in other technology areas that we can pursue.

John - So when there is a disruptive technology (of which cloud computing is an example), what Westcon can do is understand the impact of the technology and help its customers with an approach on how to be successful with that disruption.

Dean - To a large extent you are absolutely right, but there is a nuance to what you said. There is a tremendous opportunity in what I call unintended consequences. Take cloud-based computing, which we have readily embraced. We found that we needed to upgrade our networks and improve our security, at the end user point as well as across the network, to make this happen. And those required improvements were not contemplated when we embarked on moving to the cloud. And this translates over to our customer partners as well.

John - So you can encapsulate these learnings around unintended consequences and pass them on to the system integrators and VARs that are your customers.

Dean - Exactly. We have marketing programs in place so our customers are articulate and not just parroting the Westcon point of view.

John - Over the years, if you were to take the top 10% most successful Westcon customers and compare them to the bottom 10%, are there any patterns that you see the top 10% following that differentiate them?

Dean - One of the hallmarks of success is that there is real customer empathy. You must understand the challenges the customer is facing and the opportunities for success in a way that is consistent with the culture, the business values, the approach, and the business metrics that customer has. And there is no question that by really understanding these items you will be able to convert them into a set of integrated offerings that address the challenges and provide a platform for the opportunity. By the way, those could be tangential to the actual product being sold. The product sale may be a facilitator or it may be a platform for providing other capabilities that had not been considered. So having that open view to what a customer may be wrestling with, as opposed to having preconceived notions of the solution, is what differentiates the most successful of the Westcon customers.

John - How do these companies achieve that customer empathy? Does that mean that they have a larger population of customer-facing people, or a more skilled sales force, or what?

Dean - I do not think it requires a larger sales team. But it does require a sales team that is experienced and highly skilled. They should also be conversant in alternative approaches so that they can design a solution that is best for the customer. The training may be in a technical area but it could also be in an awareness sense as well. How do you probe, how do you ask questions that uncover what the customer is really doing and thinking? However, I am surprised by how many sales people miss this and they could have been in sales for a long time. For example, at Westcon we have a capital committee that gets together to make decisions on purchases of anything over $50K. And our capital committee requires the submission to have a real ROI business case. So, if I were selling to Westcon and had something more expensive than $50K, I should know about the capital committee and its decision making process. I should help my customer in Westcon to put together a good ROI, maybe suggesting that other groups in Westcon could also benefit from the purchase and be brought into the sale.

John - So more important than knowing 100 things about a Cisco router, a sales person should understand the lay of the land in that customer and the decision-making forces in play.

Dean - You and I would think of this as a fundamental diagnostic. The more we know about what is going on with our customer, the better we can craft a successful solution for that customer.

John - Let me take it back to that CRN Top 25 announcement. One of the items mentioned was that you had set up a training center for your customers. Does this center provide more than just technical training? Do you also offer up some training on how to be empathetic with a customer?

Dean - Actually it goes beyond both of those. We decided to open a center in Denver and in Brussels to start with. We call these LEAP Centers: Learning / Experience / Architect / Plan. What we have created in those centers is a real-world data center. In that center are all the products a real data center would have: servers, networks, routers, and storage, including both products that Westcon distributes and some products we don’t distribute but that will likely be in the centers that our customers sell into. By having a complete array of products and technologies we can give our resellers the opportunity to come in and educate themselves on what the state of play is in the marketplace today with current hardware and software. We offer classroom instruction as well as true hands-on learning. This is the Learning / Experience piece. The second part of the center is to provide our customers a place to consider how they would change or expand their portfolio to deal with the changing marketplace. For example, if they are a networking VAR, they can learn how to have relevance in a network-centric data center or network storage data center, adding derivative products and capabilities to the VAR’s core expertise. That is the architecture piece of LEAP. Finally, we can bring the end user into the facility to plan on how to bring these solutions to the marketplace. And if your customers only have IBM and Cisco and EMC we can rapidly configure an environment in the LEAP facility to match that product profile. The experience an SI or VAR can get in our facility will give them real confidence that the products they are proposing to their customers will really solve that customer's challenges.

John - I can imagine that a LEAP Center would be in high demand and would need to be reserved so that one of your SI/VARs could come in and work extensively with the products you have.

Dean - Well, it turns out that with modern virtualization technology we can offer a lot of the center capability remotely and rapidly configure the environment. For example, the Westcon team in Brazil uses our Denver center for their customers.

John - Do other distributors offer a LEAP-type Center?

Dean - Everyone has a demo center, but Westcon is the only distributor that I know of that offers the kind of capabilities we have talked about to help SI/VARs.

John - Do you also use these centers as a place to bring in emerging technologies in order to evaluate and showcase what might be coming over the horizon? For example, I attended the BladeSystems Insight Summit earlier this year and saw an immersion cooling technology from Hardcore Computer that I thought was very intriguing. Would this be something you might use on a couple of racks in your LEAP data center?

Dean - Absolutely. We have to be careful in how we do that so that the products have real applicability, but we want to educate our customers on the emerging trends that will affect their business.

John - You had mentioned one pattern of success is having empathy with your customer. Any other patterns?

Dean - Yes. This may sound almost intuitive, but you really must have currency in what is happening in the marketplace today to be effective. If you step away from the marketplace for a year or two, you are lost. So successful VARs/SIs must invest in continuous education of their workforce. What Westcon has done is to set up an office of the Chief Technology Officer. Our CTO understands the technical trends in the marketplace, what new technologies are being adopted, and how they are being adopted in specific applications. With this insight, Westcon can recruit new suppliers to extend our portfolio.

John - With this understanding, do you package it up and offer it to your customers? If the SI/VARs' challenge is to keep current and Westcon can facilitate that process then your customers become more competitive.

Dean - Exactly, we use blogs, emails, Twitter, newsletters, whatever mechanism is the best for our customers to communicate this information. Some of this information is regulatory and how the regulations can create opportunities for growth.

John - You mentioned a number of outbound messaging mechanisms. Do you also have contact centers to support queries from your customers?

Dean - We actually try to avoid call centers. We want all our customers to have a primary Westcon contact for any issues or questions. We think that having a relationship with the customer is an important part of our service. In fact, in a 2010 survey that we conducted with over 1600 partners worldwide, our front line account management team was rated as one of the top factors contributing to our partners' success.

John - I wonder if a pattern for the top 10% is that they interact with Westcon more frequently. If you were to correlate annual sales with contact frequency would that line up?

Dean - If you look at the Westcon customer base, 60% are traditional resellers, and some of them are very large companies, with several hundred million dollars a year in sales. But the majority of the resellers are sub $10M per year. The remaining 40% of our revenues come from global systems integrators, Federal systems integrators, and large service providers. Our largest customer is a large telecommunications company. These very large customers have developed all the technical resources that they need around a given product or technology. There are other services that we provide our large customers beyond technical insight. They could buy direct from the manufacturer, but they buy from Westcon because we can offer global logistics, global deployments that can address country-specific compliance issues, backup/recovery support, and import/export support. Basically the high end customers have a different interaction and benefit in different ways by working with Westcon than do the high number of smaller customers. We have to be able to succeed with both types.

John - Let’s shift gears and look at some Failures to Launch. Could you tell us about companies that tried to work with Westcon and the reasons that they might have failed?

Dean - The most fundamental thing that I have seen is a company that goes into a competitive deal with a set plan as opposed to maintaining flexibility. They have a preconceived notion of what the customer needs and push that aggressively to that customer. They may have done all the due diligence but the requirements at the time of the deal may have shifted and they just do not listen.

John - Do you think this is because they are trying to be more economical and not invest as much cost of sales?

Dean - The ones that I have been personally involved in seem to apply the same amount of effort but the sales team has this preconceived notion of what the customer will say and didn't clarify by probing to validate the information. They just assume the response is going to be what they originally believed and jump rapidly to their offering. Sometimes the SI/VAR spends too much investment up front building a one size fits all solution when they should listen to exactly what their customer is asking for.

John - What do you think the NEXT BIG THING will be? I am looking about three years over the horizon. It could be an emerging technology OR a new business model for Westcon.

Dean - OK. One of each.

Dean - I think in the application of technology it will be the emergence of the virtual desktop. A device that does not have local storage but depends on connectivity to a server. These virtual desktops might be a laptop or a tablet. It requires network attachment to the server in order for its application portfolio to be used. Part of the reason for the shift will be economic. And the lower cost of the thin client is a fraction of the total cost of ownership. I think that the simplification in distribution, support, and security of data will be the significant contributors to cost reduction.

John - Would a concrete example be iPads, Chrome OS, and even smartphones?

Dean - I personally think that the smartphone has too small a footprint to be a total desktop replacement but the iPad is a great example of the virtual desktop. I think you are seeing the traditional desktop vendors like VMware, Citrix, and Microsoft shape their products to compete in this emerging space. For Westcon, we have to think about the impact of desktop virtualization to the network and data centers for security, load balancing, data management, etc.

John - And the second NEXT BIG THING?

Dean - Today we see a lot of very specialized hardware in a data center that supports different specific services. Three years might be a little too soon, but I think we will start to see the replacement of these specialized hardware platforms with more generic, lower cost hardware computing modules. These modules have a common virtualization environment and the specific functionality is provided entirely by a software layer on top.

John - And how does this impact Westcon?

Dean - The solutions that we offer will become more complex in that we have to do a lot more configuration and integration of the software that gives these commodity modules the applications that our SI/VARs need. I think with all this software we will have a different economic model around the licensing, maintenance, and support. It might look like a long term annuity type model. And Westcon would offer extensions and specializations to base software modules much like service providers like Verizon do today for smartphones.

John - Thank you again for providing your insights to the Patterns of Success.

Friday, January 7, 2011

The CES Index

Like many of my technical colleagues, I have been following the Consumer Electronics Show for new and cool gadgets. Meanwhile, I have been asked to offer some consulting advice on Near Field Communication (NFC).

One of the factors in the adoption of NFC will be the number of devices being manufactured with an NFC chip. You also have to have enough readers, and merchants, and banks, and service providers, and credit card companies. Lots of factors involved in overall adoption.

But I wanted to check on any announcements or exhibitors at CES that were focused on NFC, so off I went and tried a search.

Only 9 results with all but one being a general press release on their TechZones. So not lots of focus on NFC solutions at CES this year.

Several years ago when I was a seminar instructor, students would often ask me about the popularity of different programming languages, operating systems, hardware platforms etc. I told them about the Barnes & Noble index. Go to your Barnes & Noble and look at the bookshelves. Is Java more popular than C++? How about Visual Basic? Just measure the number of shelf feet dedicated to a topic to measure its relative importance to the industry.

Would a CES index apply in giving a quick and dirty forecast of industry trends? So I tried to search on a bunch of keywords and here are the results. For any given category I took the lowest absolute result and used that as the denominator to normalize the index. I also compared the CES Index with the relative search frequency as reported by Google Trends over the last thirty days.

Popular Vendors

Lots of Microsoft at CES. Specifically there were 1370 search results and only 5 for RIM. So the CES Index is 1370/5 = 274. In my opinion this is a combination of actual use of Microsoft products by many exhibitors plus the fact that Microsoft outspent many other companies at CES. How else do they get Mr Ballmer to be the keynote speaker?
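The normalization described above can be sketched in a few lines of Python. This is only an illustration of the arithmetic: the function name `ces_index` and the dictionary layout are my own assumptions, and the only real data points are the two vendor counts quoted above.

```python
def ces_index(hits):
    """Normalize raw search-result counts by the smallest count in the category.

    `hits` maps a keyword to its number of search results. The keyword with
    the fewest results gets an index of 1.0; the rest are expressed as
    multiples of it.
    """
    baseline = min(hits.values())
    if baseline <= 0:
        raise ValueError("the smallest count must be positive to serve as the denominator")
    return {keyword: count / baseline for keyword, count in hits.items()}


# The two counts quoted above for the Popular Vendors category.
vendor_hits = {"Microsoft": 1370, "RIM": 5}
vendor_index = ces_index(vendor_hits)
print(vendor_index)  # {'Microsoft': 274.0, 'RIM': 1.0}
```

One consequence of using the lowest count as the denominator is that a keyword with zero results cannot be part of a category, which is why the sketch rejects a non-positive baseline.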

OS Platforms

The Google Trends (red) for the last 30 days did not have a very high volume of searches for Windows Mobile relative to the other OSes. General Windows was strong at CES and also on the web. What surprises me in this data set is the relatively weak showing of Android at CES.

Service Providers

Verizon was a big player at CES and for internet searches in general.

Networks

Both at CES and on Google Trends, HSPA+ is not really a focus. 3G continues to dominate, with the emerging 4G a close second. I think LTE was more prevalent at CES specifically because of the Verizon announcements.

Emerging Techs

Finally, what drove me to this interesting exercise: NFC, like RFID, has relatively few occurrences (I would not have expected RFID to be discussed much at CES). Cloud Computing is big, but the hot tech of the show and the month is Tablets.
